- 置頂

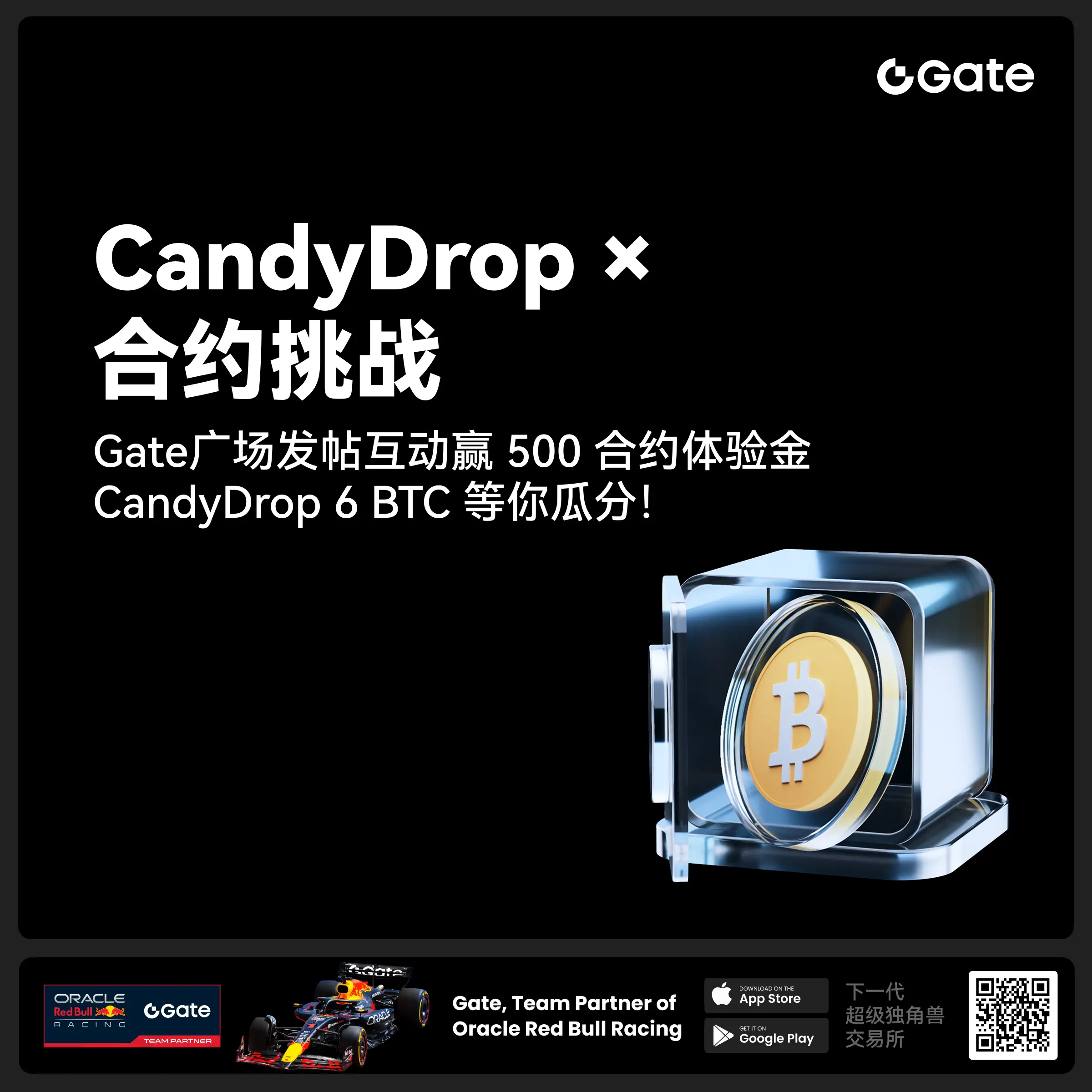

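AI beats Google's CAPTCHA: the latest multimodal LLM understands space more accurately than GPT-4V

Source: QbitAI (量子位)

Google's human-verification checks can no longer keep AI out!

The latest multimodal large model can easily find every traffic light in an image and accurately mark the position of each one.

It can even identify very small parts: in the example image, it recognizes the tiny component labeled region 1 as a shock absorber.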

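Point at any part of an image, and the model understands

The model is Ferret, and the core problem it tackles is tightly coupling two spatial-understanding abilities: referring and grounding.

Referring means the model accurately understands the semantics of a given region: point to a location, and it knows what is there.

Grounding is the reverse: given a semantic description, the model finds the corresponding target in the image.

Humans combine these two abilities naturally, yet many existing multimodal LLMs can only perform referring or grounding in isolation.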

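Ferret instead uses a hybrid region representation, which lets the model tell apart objects whose bounding boxes are nearly identical.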

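Consider the two objects in the example figure: with discrete bounding boxes alone, the model gets "confused". Combining the boxes with a continuous, free-form representation in a hybrid scheme solves this problem well.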
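A toy illustration of the ambiguity (a hypothetical example, not taken from the paper): two thin diagonal objects on a 10×10 grid share exactly the same bounding box, yet their pixel masks do not overlap at all. Box coordinates alone cannot tell them apart; a free-form region can.

```python
def bbox(mask):
    """Tight bounding box (x1, y1, x2, y2) of a mask given as a set of (x, y) pixels."""
    xs = [x for x, _ in mask]
    ys = [y for _, y in mask]
    return (min(xs), min(ys), max(xs), max(ys))


def box_area(box):
    """Pixel area of an inclusive (x1, y1, x2, y2) box."""
    return (box[2] - box[0] + 1) * (box[3] - box[1] + 1)


def box_iou(a, b):
    """Intersection-over-union of two inclusive pixel boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1 + 1) * max(0, iy2 - iy1 + 1)
    return inter / (box_area(a) + box_area(b) - inter)


def mask_iou(m1, m2):
    """Intersection-over-union of two pixel sets."""
    return len(m1 & m2) / len(m1 | m2)


size = 10
pole_a = {(i, i) for i in range(size)}             # main diagonal
pole_b = {(i, size - 1 - i) for i in range(size)}  # anti-diagonal

print(bbox(pole_a), bbox(pole_b))           # (0, 0, 9, 9) (0, 0, 9, 9)
print(box_iou(bbox(pole_a), bbox(pole_b)))  # 1.0
print(mask_iou(pole_a, pole_b))             # 0.0
```

Box IoU says the two regions are identical, while mask IoU says they share no pixels, which is exactly the information a box-only representation throws away.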

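As a result, Ferret can accept a variety of region inputs, such as points, bounding boxes, and free-form shapes, and understand their semantics.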
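One way to picture how a single model can accept all three input types is to rasterize each of them into a binary mask, the form that the spatial-aware sampler described later in the article operates on. This is a sketch under stated assumptions: the function names, the nested-list grid, and the radius used to turn a clicked point into a small square are illustrative, not taken from Ferret.

```python
def point_mask(x, y, h, w, radius=1):
    """Mask a (2*radius+1)-wide square around a clicked point; the radius is an assumption."""
    return [[int(abs(c - x) <= radius and abs(r - y) <= radius) for c in range(w)]
            for r in range(h)]


def box_mask(x1, y1, x2, y2, h, w):
    """Mask every cell inside an inclusive box."""
    return [[int(x1 <= c <= x2 and y1 <= r <= y2) for c in range(w)] for r in range(h)]


def inside_polygon(px, py, poly):
    """Even-odd ray-casting test of point (px, py) against polygon [(x, y), ...]."""
    inside = False
    for (xa, ya), (xb, yb) in zip(poly, poly[1:] + poly[:1]):
        if (ya > py) != (yb > py):  # edge straddles the horizontal ray
            x_cross = xa + (py - ya) * (xb - xa) / (yb - ya)
            if px < x_cross:
                inside = not inside
    return inside


def polygon_mask(poly, h, w):
    """Mask cells whose centres fall inside a free-form polygon."""
    return [[int(inside_polygon(c + 0.5, r + 0.5, poly)) for c in range(w)] for r in range(h)]


H, W = 8, 8
masks = {
    "point": point_mask(3, 3, H, W),
    "box": box_mask(1, 1, 3, 2, H, W),
    "free-form": polygon_mask([(0, 0), (8, 0), (0, 8)], H, W),
}
print({name: sum(map(sum, m)) for name, m in masks.items()})
# {'point': 9, 'box': 6, 'free-form': 28}
```

Once every input is a mask, the downstream feature extraction does not need to know which kind of region the user drew.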

In its output, it automatically generates the coordinates of each grounded object alongside the text.
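If the coordinates are emitted inline as plain text, a downstream program can recover them with a simple parser. The `[x1, y1, x2, y2]` format and the sample answer below are assumptions made for this sketch; the article does not specify Ferret's exact output syntax.

```python
import re

# Assumed inline format: "... phrase [x1, y1, x2, y2] ..."
BOX_PATTERN = re.compile(r"\[\s*(\d+)\s*,\s*(\d+)\s*,\s*(\d+)\s*,\s*(\d+)\s*\]")


def extract_boxes(answer):
    """Return every integer box [x1, y1, x2, y2] found in a model answer, in order."""
    return [tuple(int(v) for v in m.groups()) for m in BOX_PATTERN.finditer(answer)]


answer = "There are two traffic lights: one [120, 40, 160, 110] and another [300, 42, 338, 115]."
print(extract_boxes(answer))  # [(120, 40, 160, 110), (300, 42, 338, 115)]
```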

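Ferret combines discrete coordinates with continuous features to form a hybrid region representation.

This representation is designed to handle regions of varied shapes and formats, including points, bounding boxes, and free-form shapes.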
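A minimal sketch of what a hybrid region could look like when it is handed to the language model: the discrete part is written into the prompt as text, and a placeholder token marks where the continuous feature vector would be substituted at the embedding level. The class, the `<region_fea>` token name, and the prompt template are hypothetical.

```python
from dataclasses import dataclass

PLACEHOLDER = "<region_fea>"  # hypothetical special token


@dataclass
class HybridRegion:
    box: tuple[int, int, int, int]  # discrete part: quantized box coordinates
    feature: list[float]            # continuous part: pooled region feature


def render(template, regions):
    """Fill each {} in the template with 'box text + placeholder'.

    Returns the prompt text and the continuous features, in the same order
    as the placeholders, so they can later replace those tokens' embeddings.
    """
    slots = [f"[{', '.join(map(str, r.box))}] {PLACEHOLDER}" for r in regions]
    return template.format(*slots), [r.feature for r in regions]


text, feats = render(
    "What is the object {} doing next to {}?",
    [HybridRegion((10, 20, 200, 300), [0.1, 0.2]),
     HybridRegion((210, 25, 400, 310), [0.3, 0.4])],
)
print(text)
# What is the object [10, 20, 200, 300] <region_fea> doing next to [210, 25, 400, 310] <region_fea>?
```

Keeping the features in a list aligned with placeholder order is one simple design; it lets the text side stay ordinary tokens while the continuous side is injected separately.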

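For the discrete coordinates, each coordinate of the target box is quantized into a discrete value; this quantization makes the model robust to different image sizes.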
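The article does not give the bin count or the exact normalization, so this sketch assumes coordinates are normalized by image width and height and then binned into 1000 integer levels. Under that assumption, the same relative box produces the same discrete coordinates at any resolution.

```python
def quantize_box(box, img_w, img_h, n_bins=1000):
    """Map a pixel box (x1, y1, x2, y2) to integer bins in [0, n_bins - 1].

    Coordinates are normalised by image width/height before binning, so the
    result depends on the box's relative position, not on the image size.
    n_bins=1000 is an assumed value for illustration.
    """
    def q(v, size):
        return min(n_bins - 1, int(v * n_bins // size))

    x1, y1, x2, y2 = box
    return (q(x1, img_w), q(y1, img_h), q(x2, img_w), q(y2, img_h))


# The same relative region in a 640x480 image and in its 2x upscaled version.
small = quantize_box((64, 48, 320, 240), 640, 480)
large = quantize_box((128, 96, 640, 480), 1280, 960)
print(small, large)  # (100, 100, 500, 500) (100, 100, 500, 500)
```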

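The continuous features are extracted by a spatial-aware visual sampler, which uses a binary mask and the feature map to randomly sample points within the region of interest (ROI) and obtains each point's feature through bilinear interpolation.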
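The two operations named above, random sampling inside a binary mask and bilinear interpolation on a feature map, can be sketched as follows. This is an illustrative assumption, not Ferret's code: the mask and feature map share one grid here, whereas a real model would rescale between image and feature-map resolution, and the toy feature map is chosen so the result is easy to check.

```python
import random


def bilinear(feat, x, y):
    """Bilinearly interpolate feat[row][col] (each entry a vector) at continuous (x, y)."""
    h, w = len(feat), len(feat[0])
    x0, y0 = int(x), int(y)
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    dx, dy = x - x0, y - y0
    return [
        a * (1 - dx) * (1 - dy) + b * dx * (1 - dy) + c * (1 - dx) * dy + d * dx * dy
        for a, b, c, d in zip(feat[y0][x0], feat[y0][x1], feat[y1][x0], feat[y1][x1])
    ]


def sample_region(mask, feat, n_points, seed=0):
    """Randomly sample n_points continuous positions inside a binary mask.

    Returns a list of ((x, y), feature) pairs. Assumes mask and feature map
    share one grid.
    """
    rng = random.Random(seed)
    h, w = len(mask), len(mask[0])
    cells = [(c, r) for r in range(h) for c in range(w) if mask[r][c]]
    samples = []
    for _ in range(n_points):
        c, r = rng.choice(cells)
        # Jitter within the chosen cell so positions are continuous, not grid-aligned.
        x, y = min(c + rng.random(), w - 1), min(r + rng.random(), h - 1)
        samples.append(((x, y), bilinear(feat, x, y)))
    return samples


H, W = 6, 6
mask = [[int(1 <= c <= 3 and 2 <= r <= 4) for c in range(W)] for r in range(H)]
# Toy 2-channel feature map whose values equal the cell coordinates (col, row).
feat = [[[float(c), float(r)] for c in range(W)] for r in range(H)]
samples = sample_region(mask, feat, n_points=8)
```

Because the toy feature map is linear in position, each interpolated feature equals its sampled (x, y), which makes the interpolation easy to verify by eye.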

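These features are processed by a spatial-aware module inspired by 3D point-cloud models, condensed into a single vector, and mapped into the large language model (LLM) for further processing.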
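The article gives only the outline of this step, so the sketch below fills it in with a deliberately simplified, PointNet-style design chosen as an assumption: a shared layer applied to each sampled point's k nearest neighbours, max pooling to one vector per anchor and then to one vector per region, and a final linear projection to the LLM's hidden size. Anchor selection, layer sizes, and the random weights are illustrative and do not reproduce Ferret's actual module.

```python
import math
import random


def linear(x, weight, bias):
    """Compute W x + b, with W given as a list of rows."""
    return [sum(wi * xi for wi, xi in zip(row, x)) + b for row, b in zip(weight, bias)]


def relu(v):
    return [max(0.0, a) for a in v]


def random_layer(n_in, n_out, rng):
    """Randomly initialised (weight, bias) pair; stands in for learned parameters."""
    weight = [[rng.uniform(-1, 1) / math.sqrt(n_in) for _ in range(n_in)] for _ in range(n_out)]
    return weight, [0.0] * n_out


def knn(points, center, k):
    """Indices of the k points whose positions are closest to center."""
    return sorted(range(len(points)), key=lambda i: math.dist(points[i][0], center))[:k]


def condense(points, n_anchors, k, mlp, proj):
    """Turn ((x, y), feature) samples into one vector in the LLM embedding space."""
    anchor_vecs = []
    for (ax, ay), _ in points[:n_anchors]:  # anchors: simply the first samples
        neighbours = []
        for i in knn(points, (ax, ay), k):
            (px, py), f = points[i]
            # Shared layer on relative position + feature, like a point-cloud encoder.
            neighbours.append(relu(linear([px - ax, py - ay] + f, *mlp)))
        # Max-pool over the neighbourhood: invariant to the order of neighbours.
        anchor_vecs.append([max(col) for col in zip(*neighbours)])
    region_vec = [max(col) for col in zip(*anchor_vecs)]  # one vector for the region
    return linear(region_vec, *proj)  # project to the LLM's hidden size


rng = random.Random(0)
feat_dim, hidden, d_llm = 4, 8, 16
points = [((rng.uniform(0, 10), rng.uniform(0, 10)), [rng.gauss(0, 1) for _ in range(feat_dim)])
          for _ in range(32)]
mlp = random_layer(2 + feat_dim, hidden, rng)
proj = random_layer(hidden, d_llm, rng)
region_embedding = condense(points, n_anchors=8, k=4, mlp=mlp, proj=proj)
print(len(region_embedding))  # 16
```

Reordering the anchor samples leaves the result unchanged, because every pooling step is a max; that order-insensitivity is the property point-cloud encoders are built around.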

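To train the model, the team also built a dataset, GRIT, with 1.1M samples spanning four main categories: individual objects, relationships between objects, region-specific descriptions, and region-based complex reasoning.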

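GRIT includes data converted from public datasets and instruction-tuning data generated with ChatGPT and GPT-4, plus 95K hard negative samples to improve the model's robustness.

Ferret was evaluated on LLaVA-Bench and Ferret-Bench and performed strongly across all tasks, particularly on the three new tasks that require referring and visual grounding.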

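An all-Chinese team

Ferret was developed jointly by research teams from Apple AI/ML and Columbia University, with an all-Chinese lineup.

Haoxuan You and Haotian Zhang are co-first authors.

Haoxuan You is currently a computer science Ph.D. student at Columbia University and will join Apple's AI/ML team after graduating. He received his bachelor's degree from Xidian University in 2018.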

His main research interests are vision-language understanding and text-to-image generation.

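Haotian Zhang received his Ph.D. from the University of Washington before joining Apple, and his bachelor's degree from Shanghai Jiao Tong University.

He is one of the main authors of GLIP/GLIPv2; GLIP was nominated for the CVPR 2022 Best Paper Award.

Paper: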