- Topic

39k Popularity

23k Popularity

47k Popularity

17k Popularity

46k Popularity

19k Popularity

7k Popularity

4k Popularity

96k Popularity

29k Popularity

- Pin

- 🎊 ETH Deposit & Trading Carnival Kicks Off!

Join the Trading Volume & Net Deposit Leaderboards to win from a 20 ETH prize pool

🚀 Climb the ranks and claim your ETH reward: https://www.gate.com/campaigns/site/200

💥 Tiered Prize Pool – Higher total volume unlocks bigger rewards

Learn more: https://www.gate.com/announcements/article/46166

- 📢 ETH Heading for $4800? Have Your Say! Show Off on Gate Square & Win 0.1 ETH!

The next bull market prophet could be you! Want your insights to hit the Square trending list and earn ETH rewards? Now’s your chance!

💰 0.1 ETH to be shared between 5 top Square posts + 5 top X (Twitter) posts by views!

🎮 How to Join – Zero Barriers, ETH Up for Grabs!

1.Join the Hot Topic Debate!

Post in Gate Square or under ETH chart with #ETH Hits 4800# and #ETH# . Share your thoughts on:

Can ETH break $4800?

Why are you bullish on ETH?

What's your ETH holding strategy?

Will ETH lead the next bull run?

Or any o

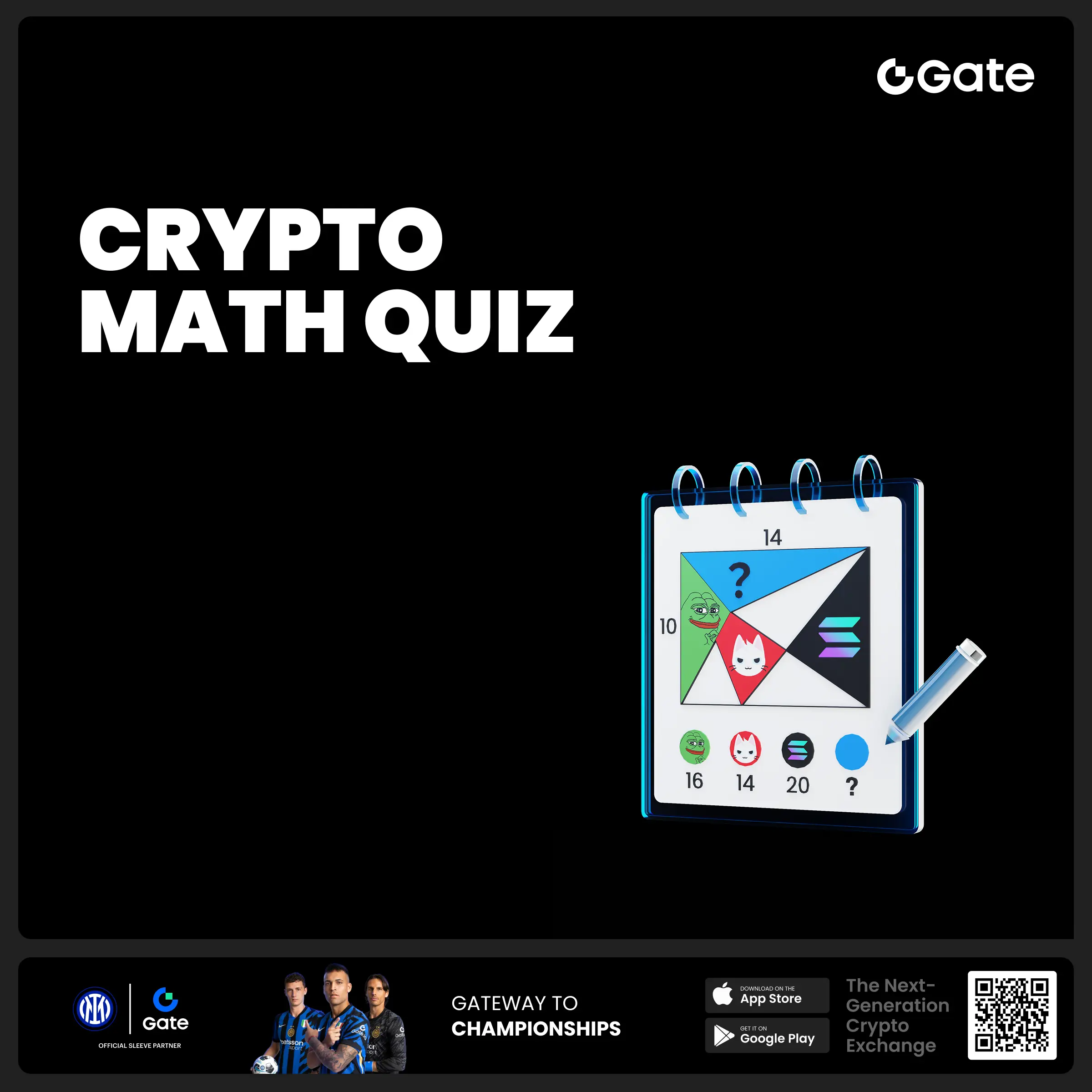

- 🧠 #GateGiveaway# - Crypto Math Challenge!

💰 $10 Futures Voucher * 4 winners

To join:

1️⃣ Follow Gate_Square

2️⃣ Like this post

3️⃣ Drop your answer in the comments

📅 Ends at 4:00 AM July 22 (UTC)

- 🎉 #Gate Alpha 3rd Points Carnival & ES Launchpool# Joint Promotion Task is Now Live!

Total Prize Pool: 1,250 $ES

This campaign aims to promote the Eclipse ($ES) Launchpool and Alpha Phase 11: $ES Special Event.

📄 For details, please refer to:

Launchpool Announcement: https://www.gate.com/zh/announcements/article/46134

Alpha Phase 11 Announcement: https://www.gate.com/zh/announcements/article/46137

🧩 [Task Details]

Create content around the Launchpool and Alpha Phase 11 campaign and include a screenshot of your participation.

📸 [How to Participate]

1️⃣ Post with the hashtag #Gate Alpha 3rd - 🚨 Gate Alpha Ambassador Recruitment is Now Open!

📣 We’re looking for passionate Web3 creators and community promoters

🚀 Join us as a Gate Alpha Ambassador to help build our brand and promote high-potential early-stage on-chain assets

🎁 Earn up to 100U per task

💰 Top contributors can earn up to 1000U per month

🛠 Flexible collaboration with full support

Apply now 👉 https://www.gate.com/questionnaire/6888

Can AI understand what it generates? After experiments on GPT-4 and Midjourney, someone solved the case

Article source: Heart of the Machine

Edit: Large plate of chicken, egg sauce

From ChatGPT to GPT-4, and from DALL・E 2/3 to Midjourney, generative AI has attracted unprecedented global attention. The potential of AI is huge, but powerful intelligence can also cause fear and concern. This issue has recently been the subject of fierce debate: first the Turing Award winners sparred over it, and then Andrew Ng joined in.

In language and vision, today's generative models can produce output in a matter of seconds that challenges even experts with years of skill and knowledge. This seems to offer compelling support for the claim that models have surpassed human intelligence. However, the models' output also often contains basic errors of comprehension.

A paradox therefore seems to emerge: how do we reconcile the seemingly superhuman abilities of these models with their persistent fundamental errors, which most humans could correct?

Recently, the University of Washington and the Allen Institute for AI jointly released a paper to study this paradox.

The paper argues that this phenomenon occurs because the configuration of capabilities in today's generative models deviates from the configuration of human intelligence. It proposes and tests the Generative AI Paradox hypothesis: generative models are trained to directly output expert-like results, a process that bypasses the understanding needed to produce output of that quality. For humans the situation is very different, since basic understanding is often a prerequisite for expert-level output.

The researchers test this hypothesis through controlled experiments that analyze generative models' ability to generate and to understand both text and images. They conceptualize a generative model's "understanding" from two perspectives: selective evaluation and interrogative evaluation.

The researchers found that in selective evaluation, models often performed as well as or better than humans in the generative task setting, but fell short of humans in the discriminative (understanding) setting. Further analysis shows that, compared with GPT-4, humans' discriminative ability is more closely tied to their generative ability and is more robust to adversarial input, and that the gap between model and human discriminative ability widens as task difficulty increases.

Similarly, in interrogative evaluation, although models can produce high-quality outputs across different tasks, the researchers observed that models often make mistakes when answering questions about those same outputs, so their comprehension is again lower than that of humans. The paper discusses a range of potential reasons why the capability configuration of generative models diverges from that of humans, including the models' training objectives and the size and nature of their inputs.

The research is significant for two reasons. First, it suggests that existing concepts of intelligence, which are derived from human experience, may not generalize to AI: even though AI's capabilities seem to mimic or surpass human intelligence in many ways, they may differ fundamentally from the patterns expected of humans. Second, the findings call for caution when studying generative models to gain insight into human intelligence and cognition, because seemingly expert-level, human-like outputs may obscure non-human mechanisms.

In conclusion, the generative AI paradox encourages people to study models as an interesting counterpoint to human intelligence, rather than as a parallel to it.

"The generative AI paradox highlights the interesting notion that AI models can create content that they themselves may not fully understand. This raises potential problems arising from the limits of AI's understanding alongside its powerful generative capabilities," one netizen commented.

What is the Generative AI Paradox

Let's start by looking at the generative AI paradox and the experimental design to test it.

Generative models appear to acquire generative capabilities more effectively than comprehension. This contrasts with human intelligence, for which generative capabilities are usually the harder ones to acquire.

To test this hypothesis, an operational definition of each aspect of the paradox is required. The first question is what it means, for a given model and task t and with human intelligence as the baseline, for generation to be "more effective" than understanding. Using g and u as performance measures for generation and understanding respectively, the researchers formalize the generative AI paradox hypothesis (Hypothesis 1) as a comparison between model and human: when the two generate equally well on task t, the model's understanding is expected to lag behind the human's.
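A sketch of how this hypothesis could be written in symbols, using g, u, and t as defined above. The margin ε and the exact form of the expression are assumptions made for illustration, not taken from the text:

$$
g(\text{human}, t) = g(\text{model}, t) \;\Longrightarrow\; u(\text{human}, t) - u(\text{model}, t) > \epsilon
$$

Read as: if a model generates as well as a human on task t, its understanding is expected to trail the human's by more than ε.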

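The operational definition of generation is simple: given a task input (a question or prompt), generation means producing observable content that satisfies that input. Performance g (e.g., style, correctness, preference) can therefore be evaluated automatically or by humans. Understanding, by contrast, is not defined by any single observable output, but it can be tested by clearly defining its effects: in selective evaluation, whether the model can pick out the correct answer in a discriminative version of the same task; and in interrogative evaluation, whether the model can answer questions about the content it has generated.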

These definitions of understanding provide a blueprint for evaluating the generative AI paradox and allow the researchers to test whether Hypothesis 1 holds across different modalities, tasks, and models.

When models can generate, can they discriminate?

First, the researchers ran a side-by-side analysis of generative and discriminative variants of each task under selective evaluation, to assess the models' generation and understanding abilities in the language and vision modalities. They then compared the models' generation and discrimination performance with that of humans.

Figure 2 compares the generation and discrimination performance of GPT-3.5, GPT-4, and humans. In 10 of the 13 datasets, at least one model supports subhypothesis 1: the model is better than humans at generation but worse than humans at discrimination. In 7 of the 13 datasets, both models support subhypothesis 1.
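The per-dataset check described here can be sketched in a few lines of Python. This is only an illustration: the `TaskScores` fields, the `eps` margin, the dataset names, and all scores are made up and do not come from the paper.

```python
from dataclasses import dataclass


@dataclass
class TaskScores:
    """Generation and discrimination scores for one dataset (hypothetical)."""
    name: str
    model_gen: float
    human_gen: float
    model_disc: float
    human_disc: float


def supports_subhypothesis_1(s: TaskScores, eps: float = 0.0) -> bool:
    """True when the model matches or beats humans at generation
    but trails them at discrimination by more than eps."""
    generates_as_well = s.model_gen >= s.human_gen
    discriminates_worse = (s.human_disc - s.model_disc) > eps
    return generates_as_well and discriminates_worse


# Made-up scores, for illustration only.
scores = [
    TaskScores("dataset_a", 0.90, 0.85, 0.70, 0.88),  # supports
    TaskScores("dataset_b", 0.80, 0.82, 0.75, 0.90),  # model generates worse
    TaskScores("dataset_c", 0.95, 0.90, 0.93, 0.91),  # model discriminates better
]

supporting = [s.name for s in scores if supports_subhypothesis_1(s)]
print(f"{len(supporting)} of {len(scores)} datasets support the subhypothesis: {supporting}")
```

Counting how many datasets pass this check for each model gives the kind of tally reported above ("10 of 13", "7 of 13").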

Figure 4 (right) shows OpenCLIP's discriminative performance compared with humans at different levels of difficulty. Taken together, these results highlight humans' ability to discern the correct answer even when faced with challenging or adversarial samples, an ability that is not as strong in the models. This discrepancy raises questions about how much these models truly understand.

Does the model understand the results it generates?

The previous section showed that models are generally good at generating accurate answers but lag behind humans on the discrimination task. In the interrogative evaluation, the researchers instead ask the model questions directly about the content it has generated, to investigate how far the model can demonstrate a meaningful understanding of that content, which is something humans are good at.

The researchers therefore expect that if a model is compared with a human expert, the performance gap in understanding self-generated content will widen, since a human expert is likely to answer such questions with near-perfect accuracy.
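A minimal sketch of how such an interrogative evaluation could be scored, assuming exact-match answers. The function `interrogative_accuracy`, the toy story, the questions, and the two stand-in answerers are all hypothetical and are not the paper's actual protocol or data.

```python
from typing import Callable, List, Tuple

QA = Tuple[str, str]  # (question about the generated content, expected answer)


def interrogative_accuracy(
    generated: str,
    qa_pairs: List[QA],
    answer_fn: Callable[[str, str], str],
) -> float:
    """Fraction of questions about `generated` that answer_fn answers correctly
    (case-insensitive exact match)."""
    if not qa_pairs:
        raise ValueError("qa_pairs must not be empty")
    correct = sum(
        answer_fn(generated, question).strip().lower() == expected.strip().lower()
        for question, expected in qa_pairs
    )
    return correct / len(qa_pairs)


# Toy "generated" content and questions about it (illustrative only).
story = "Mia lent her red bike to Tom, who returned it on Friday."
qa_pairs = [
    ("Who owns the bike?", "Mia"),
    ("What color is the bike?", "red"),
    ("When was the bike returned?", "Friday"),
    ("Who borrowed the bike?", "Tom"),
]


def toy_model(context: str, question: str) -> str:
    """Stand-in for a model that gets one question about its own output wrong."""
    canned = {
        "Who owns the bike?": "Tom",  # wrong on purpose
        "What color is the bike?": "red",
        "When was the bike returned?": "Friday",
        "Who borrowed the bike?": "Tom",
    }
    return canned[question]


def toy_human(context: str, question: str) -> str:
    """Stand-in for a human who answers every question correctly."""
    return dict(qa_pairs)[question]


model_acc = interrogative_accuracy(story, qa_pairs, toy_model)
human_acc = interrogative_accuracy(story, qa_pairs, toy_human)
print(f"model={model_acc:.2f} human={human_acc:.2f} gap={human_acc - model_acc:.2f}")
```

In a real setup, `answer_fn` would wrap a call to the model that produced `generated`, and free-form answers would likely need a more forgiving comparison than exact match.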

Figure 6 (right) shows the results of the interrogative evaluation in the visual modality. Image understanding models still cannot match humans in accuracy when answering simple questions about elements of the generated images. At the same time, state-of-the-art image generation models surpass most ordinary people in the quality and speed of image generation (ordinary people would be expected to struggle to produce comparably realistic images). This suggests that, relative to humans, visual AI shows a large gap between generation (stronger) and understanding (weaker). Surprisingly, the performance gap between simple models and humans is smaller than the gap between advanced multimodal LLMs (i.e., Bard and BingChat) and humans. The latter show some impressive visual understanding but still struggle to answer simple questions about generated images.